On March 21, 2024, in a bold regulatory move, Tennessee Governor Bill Lee signed the Ensuring Likeness Voice and Image Security (“ELVIS”) Act (Tenn. Code Ann. §47-25-1101 et seq.) – a law which, as Gov. Lee stated, covers “new, personalized generative AI cloning models and services that enable human impersonation and allow users to make unauthorized fake works in the image and voice of others.” The ELVIS Act, which becomes effective on July 1, 2024, is notable in several respects. Beyond its expansion of the Tennessee right of publicity statute to include protections for musicians’ and others’ voices from the misuse of AI, the Act contains a few notable provisions, including a novel provision that creates a cause of action against a person that distributes an algorithm or technology whose “primary purpose or function” involves creating a facsimile of a person’s voice or likeness without authorization, thus potentially subjecting certain technology providers to liability.

While the ELVIS Act appears to be one of the first statutes to address AI-related right of publicity issues in the generative AI (“genAI”) era, it should be noted that back in 2019 New York State amended its right of publicity law (S.B. S5959-D, 2019-2020 Reg. Sess.) (“S.B. S5959-D”) to prohibit persons from using a “digital replica” of a “deceased performer” in certain ways without authorization in a manner that is “likely to deceive the public into thinking it was authorized.”[1] Under S.B. S5959-D, any person who creates such a computer-generated digital replica, as defined, that is then used in scripted audiovisual works, live performances of musical works, or advertisements would be liable for damages (there are exceptions for certain expressive and artistic activities and certain requirements surrounding intent to deceive the public).[2]

This post will discuss some notable aspects of the ELVIS Act (particularly with respect to AI tools), some recent litigation involving publicity claims over unauthorized use of voices and images and conclude with mention of recently passed AI-related laws in Utah and Colorado.

GenAI and the Right of Publicity

New developments in genAI – artificial intelligence that uses deep learning, large language models, and user-based input to synthesize completely new content – have taken the legal field by storm. Large language models are “trained” by inputting large amounts of data consisting of public domain material, licensed content and other content principally scraped from the web. The methods of genAI training have spawned multiple litigations that touch on various IP infringement and related issues, including whether the use of copyrighted text to train genAI models is considered fair use. Beyond these traditional IP issues, development and use of genAI tools have also presented issues related to right of publicity.

The scope of right of publicity varies depending on jurisdiction. Generally, state right of publicity laws provide a form of redress for those personalities or covered persons who have suffered economic and/or reputational harm when their image has been used for certain commercial purposes without authorization. However, most state laws have not been updated to expressly cover the unauthorized use of AI tools to exploit a person’s publicity rights. (Note: the issue of whether any state’s existing right of publicity statute, other than those previously discussed, would provide a legal cause of action for AI-related deepfakes or other related unauthorized digital facsimiles of a person’s likeness or voice is beyond the scope of this post). Until a federal AI deepfakes bill is passed (e.g., H.R.5586, “DEEPFAKES Accountability Act”), we are left with the patchwork of state publicity laws to apply to unauthorized AI-created versions of people’s likeness and voice. Tennessee’s ELVIS Act seeks to address this gap.

1. The ELVIS Act: Changes of Habit

Previous Tennessee law – largely considered to be expansive – did not specifically afford any right of publicity protections to individuals’ voices. With genAI technology becoming more accessible, however, the state revisited its statutory law. In the past year, developers released genAI technology allowing individuals to create music, lyrics, and videos, and emulate the voices of performers, all within a matter of minutes. While some of these capabilities represent an amazing tool for budding creatives in the music industry, the software’s ability to convincingly replicate voices also presents concerns for professional songwriters, musicians and the industry itself.

The ELVIS Act provides a way forward for individuals whose right of publicity has been violated in this way. Under the statute, which amends the existing right of publicity law, those who knowingly create voice replicas, which are “readily identifiable and attributable to a particular individual, regardless of whether the sound contains the actual voice or a simulation of the voice,” and are used for various commercial purposes, without prior consent, may be liable for a misdemeanor and in a civil action, unless certain fair use-style exceptions apply.

Regarding digital voice replicas, the ELVIS Act also creates a cause of action against a person who “distributes, transmits, or otherwise makes available an algorithm, software, tool, or other technology, service, or device, the primary purpose or function of […which] is the production of a particular, identifiable individual’s photograph, voice, or likeness.” At present, the statute does not provide clarity on the scope of this provision, and due to the statute’s recent passage, no court has yet interpreted what types of general purpose or more specific AI tools might violate the statute or how to determine what an AI tool’s “primary purpose” is. Let’s take a simple case. One could envision a court holding that a single-function malicious AI tool expressly advertised to create deepfake voices and images to perpetrate online fraud or disinformation would likely violate the ELVIS Act. However, general use AI tools – and certainly ones from reputable providers – typically have multiple purposes and functions, thereby creating an open issue as to whether such a tool would fall under the ELVIS Act. Some litigants might consider a primary purpose to be one that the developers explicitly advertise for their AI products. Still others may not consider a purpose to be primary unless the technology exclusively and intentionally facilitates a statutory violation.

2. GenAI-Related Publicity Litigation

The use of genAI tools with voice functions has already resulted in noteworthy buzz, if the press coverage of actress Scarlett Johansson’s recent complaint about a certain AI voice is any indication. In addition, there are multiple cases against AI developers over the use of copyrighted content for genAI training purposes. While these copyright suits have garnered the most attention, right of publicity cases are starting to arise in this age of AI.

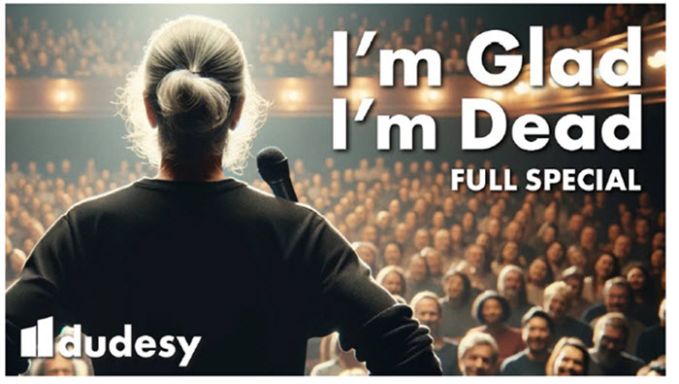

For example, in a prior dispute over AI voice impersonation this past January, the estate of comedian George Carlin filed a lawsuit against the creators of the Dudesy podcast for allegedly using genAI to write and perform jokes based on Carlin’s previous performances and impersonate the late comedian’s voice for a new one-hour YouTube comedy special entitled “I’m Glad I’m Dead.” (Main Sequence, Ltd. v. Dudesy, LLC, No. 24-00711 (C.D. Cal. Filed Jan. 25, 2024)). The creators of this genAI technology were also included as John Doe defendants in the suit. Though the podcast opened with a disclaimer that the ensuing special was the work of genAI (it was later revealed that the Carlin-style jokes themselves were not written by AI), the estate characterized the AI-aided “resurrection” of Carlin as an unauthorized “piece of computer-generated click-bait” and stated the defendants had not received permission to use Carlin’s likeness or voice. [See image of the Special from the complaint].

The estate brought a complaint alleging copyright infringement and right of publicity claims.

The complaint alleged that the defendants inputted thousands of hours of Carlin’s original routines to train an AI machine “to fabricate a semblance of Carlin’s voice and generate a Carlin stand-up comedy routine.” This training process, the Plaintiffs asserted, constituted making “unauthorized copies of the copyright work.” The Carlin estate purportedly suffered further injury when the genAI created a credible voice replica of Carlin, all in an alleged attempt to “exploit George Carlin’s identity for profit.”

Ultimately, the case settled in April 2024. As part of the settlement, the podcast’s hosts agreed to remove the special from their website and mention of it from social media accounts. They were also permanently enjoined from using Carlin’s image, voice, or likeness on the Dudesy podcast, or in any content on any platform, without the written permission of Carlin’s estate and family.

In another ongoing suit in California, a former contestant from the reality show Big Brother, Kyland Young, commenced a putative class-action suit against software developer NeoCortext Inc. (“NeoCortext”) for the use of individuals’ images and likenesses in its face-swapping app Reface. (Young v. NeoCortext Inc., No. 23-02496 (C.D. Cal. Filed Apr. 3, 2023)). Among other functions, Reface allows users to swap their faces with those of public figures in popular scenes from media to create amusing images, GIFs or videos, or even animate photos with AI technology. Plaintiff claims that Reface was “trained” through scraping data from videos, television shows, and films from the internet and that the Reface app offers a searchable catalogue of available images to swap faces with. NeoCortext offered a free version of the app – which placed watermarks on the resulting videos – and a paid version, which did not. Plaintiff brought California right of publicity claims against NeoCortext, stating that NeoCortext failed to obtain his permission to include his face in the Reface database of available swappable images and has not offered to pay Plaintiff any royalties for the commercial use of his image. Plaintiff also claims that NeoCortext uses Plaintiff’s and other individuals’ identities to promote Reface’s paid subscription option, asserting that animated images of Plaintiff can be found using the Reface search bar.

Plaintiff’s complaint seeks damages for the alleged right of publicity violations and an injunction barring NeoCortext from using class members’ images for commercial purposes. NeoCortext filed a motion to dismiss the suit under the anti-SLAPP statute, which protects persons against suits intended to deter protected rights of petition and speech.

Ultimately, however, a California district court declined to dismiss the suit. It found that, at this stage, NeoCortext had not shown that the First Amendment bars Young’s right of publicity claim. The case remains ongoing, and in fact, NeoCortext has appealed the lower court’s decision to the Ninth Circuit.

In another notable recent lawsuit, two voice actors brought a putative class action against Lovo, Inc. – a company that offers a text-to-speech subscription service that allows customers to use AI-driven software to generate voice-over narrations – claiming that Lovo “misappropriated their voices to create millions of voice-over productions without permission or proper compensation” and that Lovo had “no permission to use Plaintiffs’ voices for training its AI Generator…or to market voices based on Plaintiffs’ voices.” (Lehrman v. Lovo, Inc., No. 24-03770 (S.D.N.Y. Filed May 16, 2024)). One plaintiff alleges that voicework he had previously done for another company “for research purposes only” was later used by Lovo for AI training and “to market voices based on plaintiff’s voices” and that AI creations based on his voice were now being used by various entities for voice-over roles that he had no knowledge about and had received no compensation for. The Plaintiffs brought various claims related to the alleged unauthorized use of their voices, including right of publicity, consumer claims, fraud, and violations of the Lanham Act.

In addition to these litigations, there have also been multiple incidents in the news where celebrities’ likenesses have been spoofed using AI tools to attempt to sell a product or for other unauthorized uses. Beyond right of publicity issues, such incidents raise the question of whether commercialized use of such genAI content might constitute false endorsement under the Lanham Act or other related state law violations.

Final Thoughts

The regulatory landscape for genAI, until recently sparse, has begun to shift. At some point this year, we will likely have some early rulings in the various copyright infringement cases over genAI training practices and perhaps in some of the ongoing AI-related right of publicity suits. Moreover, the FTC has stated its intention to use its authority to continue to regulate in the space. State privacy agencies, created with the passage of comprehensive state data privacy laws, have also begun to prepare regulations surrounding “automated decisionmaking” by certain algorithms.

Some state legislatures have already passed laws covering deepfakes generally and the use of deepfakes in elections or political ads. Others are debating AI deepfake bills and related protections for consumers, as well as bills related to the overarching regulation of AI (e.g., California’s SB 1047). Interestingly, on March 13, 2024, the Utah Governor signed Senate Bill 149, which became effective on May 1st. S.B. 149: (i) provides a legal definition for generative AI;[3] (ii) revises the state’s consumer protection law to include the use of genAI;[4] (iii) creates a state-run Office of Artificial Intelligence Policy; and (iv) allows for actions against individuals who commit violations of the statute. Crucially, S.B. 149 also requires an individual or business who “uses, prompts, or otherwise causes generative artificial intelligence” to interact with a consumer (in a way regulated under state consumer laws) to disclose such genAI use, but only if asked by the user; a similar disclosure obligation is mandatory “when an individual interacts with AI in a regulated occupation.”[5] The disclosure in question needs to be provided at the start of an exchange – verbally when in a conversation, and via messaging when in a written exchange. Moreover, in late May Colorado Governor Jared Polis signed AI legislation (S.B. 205) that requires developers and deployers of high-risk AI systems to use reasonable care to avoid algorithmic discrimination; the Colorado law also contains some transparency provisions requiring a deployer of a consumer-facing AI system to disclose that the consumer is interacting with AI, unless the interface makes such a fact obvious to the reasonable person.

The issue of AI and right of publicity is also being debated in Washington. The U.S. Senate held a subcommittee hearing this spring on the bipartisan NO FAKES Act, which would create a new federal digital-replica right and prohibit the publication, distribution, or transmission of an unauthorized digital replica.

Lawmakers will need to strike a careful balance to ensure that providers can continue to innovate while still respecting individuals’ publicity rights over their (literal & figurative) voices and allowing individuals to use the technology to enhance productivity and social media interactions. Even though few states have amended their laws to specifically address the use of AI tools to exploit an individual’s name, image, likeness or voice, the environment still contains certain risks of right of publicity litigation, even with fair use-style protections enshrined in many state laws. Much remains unknown. For now, however, we’ll keep watch and report as genAI continues to invent and reinvent itself.

[1] The law defines a “digital replica” as an “original, computer-generated, electronic performance…in which the individual did not actually perform, that is so realistic that a reasonable observer would believe it is a performance by the individual being portrayed and no other individual. A digital replica does not include the electronic reproduction, computer generated or other digital remastering of an expressive sound recording or audiovisual work consisting of an individual’s original or recorded performance, nor the making or duplication of another recording that consists entirely of the independent fixation of other sounds, even if such sounds imitate or simulate the voice of the individual.”

A “deceased performer” means “a deceased natural person domiciled in this state at the time of death who, for gain or livelihood, was regularly engaged in acting, singing, dancing, or playing a musical instrument.”

It should also be noted that in 2022 Louisiana passed “The Allen Toussaint Legacy Act” (S.B. 426, Act No. 425), which expands the state’s right of publicity law to include, among other things, protections against “misappropriation of identity,” where “identity” includes “an individual’s name, voice, signature, photograph, image, likeness, or digital replica.” “Digital replica” is defined as a “reproduction of a professional performer’s likeness or voice that is so realistic as to be indistinguishable from the actual likeness or voice of the professional performer.”

[2] Unlike the ELVIS Act, however, the New York law states that a use shall not be considered likely to deceive the public if there is “a conspicuous disclaimer in the credits of the scripted audiovisual work, and in any related advertisement in which the digital replica appears, stating that the use of the digital replica has not been authorized by the person or persons specified […]”.

Also note: S.B. S5959-D contains a provision allowing a private right of action for unlawful publication of nonconsensual pornographic images of an individual. As for the use of AI to create deepfake pornography, New York passed a separate law in September 2023 (S.B. 1042A) to amend the penal law to make it unlawful to disseminate or publicize intimate images created by digitization.

[3] Under the Utah law, “Generative artificial intelligence” means an artificial system that: (i) is trained on data; (ii) interacts with a person using text, audio, or visual communication; and (iii) generates non-scripted outputs similar to outputs created by a human, with limited or no human oversight.

[4] Under the Utah law, “it is not a defense to the violation of any statute administered and enforced by [the Division of Consumer Protection] that generative artificial intelligence: (a) made the violative statement; (b) undertook the violative act; or (c) was used in furtherance of the violation.”

[5] Under the law, “a person who uses, prompts, or otherwise causes generative artificial intelligence to interact with a person in connection with any act administered and enforced by [the Division of Consumer Protection] shall clearly and conspicuously disclose to the person with whom the generative artificial intelligence [interacts], if asked or prompted by the person, that the person is interacting with generative artificial intelligence and not a human.” The law also provides that a person who provides the services of a “regulated occupation” shall prominently disclose when a person is interacting with a generative artificial intelligence in the provision of regulated services.